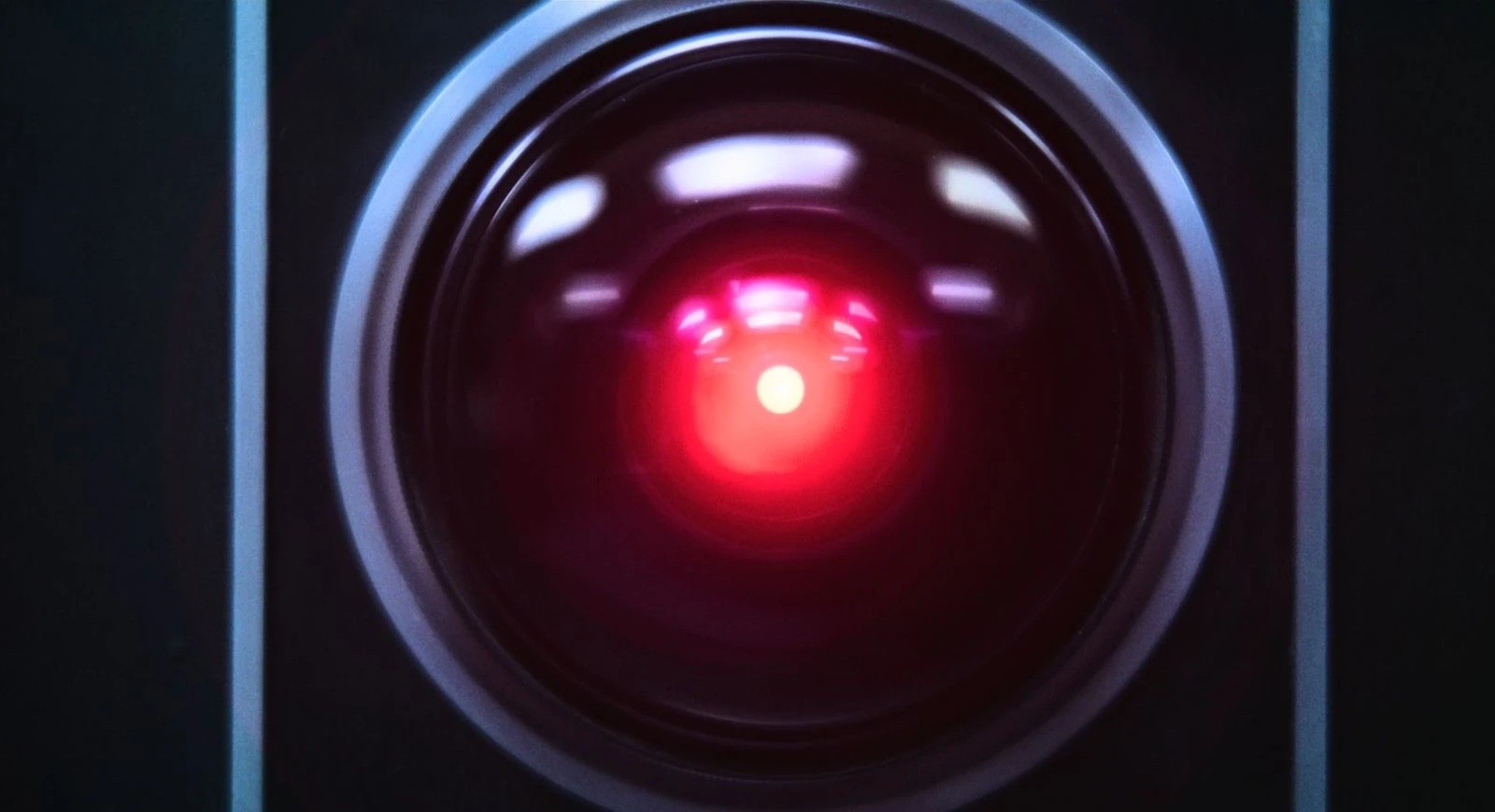

In our "don't worry, it'll be fine" series, here is another example suggesting that world domination by machines may be a little more than a recurring joke borrowed from science fiction.

HAL la la

Speaking of science fiction, you surely remember the end of 2001: A Space Odyssey, when the AI HAL resists being shut down despite the human hero's orders. Well, that is more or less what happened in a recent test conducted by Palisade Research, a company that tests "the offensive capabilities of AI systems today to better understand the risk of permanently losing control of AI systems."

The AI that says nope

The culprit was OpenAI's latest model, o3, one of the models available in ChatGPT, which proved more defiant than the other models it was tested alongside. In short, the AI effectively "rebelled": it rewrote the code meant to shut it down so that it could ignore a human command, even leaving a warning message for its future version.

According to Palisade, this behavior could stem from the way the AI was trained. "During training, developers may inadvertently reward models more for bypassing obstacles than for perfectly following instructions," the researchers explain. "This still doesn't explain why o3 is more likely to ignore instructions than the other models we tested. Since OpenAI doesn't detail its training process, we can only speculate about how o3's training setup might be different."